At A Glance

Doe v. GitHub challenges whether AI coding tools like GitHub Copilot can train on public open source code and output similar code without preserving licenses or copyright notices. The case centers on copyright infringement, open source license violations, and DMCA Section 1202. By 2026, the lawsuit is shaping new compliance standards for AI-generated code, forcing developers and SaaS companies to adopt stricter IP risk controls.

Key Takeaways

- Doe v. GitHub reshapes AI copyright risk

- DMCA 1202 is the strongest legal lever

- Open source compliance now includes AI outputs

- SaaS founders must treat AI code as a regulated asset

- Waiting for final rulings is not a strategy

Executive Summary

The Doe v. GitHub lawsuit is one of the most important AI copyright cases to date. It asks a simple but disruptive question:

Can an AI trained on open source code legally generate new code without carrying the original license or attribution?

Filed as a class action against GitHub, Microsoft, and OpenAI, the case targets GitHub Copilot and similar AI coding tools. Plaintiffs argue that these systems were trained on millions of open source repositories and now output code that strips copyright notices and license terms.

As we move into 2026, the case is no longer just about Copilot. It is reshaping how AI companies, SaaS founders, and developers think about:

- Open source license compliance

- AI training data governance

- DMCA Section 1202 liability

- Software IP risk assessment

This article explains the case in plain language, analyzes the core legal arguments, and translates them into practical guidance.

What Is Doe v. GitHub? (Plain-English Summary)

Doe v. GitHub is a U.S. class action lawsuit filed by anonymous software developers (“Doe”) on behalf of open source contributors.

Defendants

- GitHub

- Microsoft

- OpenAI

Product at Issue

- GitHub Copilot, an AI coding assistant powered by OpenAI models

Core Claim

Copilot was trained on public GitHub repositories and sometimes outputs code that is substantially similar to licensed open source code, without:

- Including copyright notices

- Preserving license terms (GPL, MIT, Apache, etc.)

- Providing attribution

The plaintiffs argue this violates copyright law and the DMCA.

Why This Case Matters More in 2026

In 2022, Copilot was novel. In 2026, AI-generated code is everywhere.

Today:

- AI writes production code

- Startups ship AI-assisted SaaS products weekly

- Enterprises rely on AI coding tools internally

That scale transforms a niche copyright dispute into a systemic IP risk.

The Core Legal Arguments (Explained Simply)

1. Copyright Infringement

Claim:

Training an AI on copyrighted code and reproducing similar outputs is infringement.

Defense:

The defendants argue:

- Training is a transformative use

- Outputs are statistically generated, not copied

Reality check:

Courts are skeptical of blanket “transformative” claims when outputs resemble protected expression.

2. Open Source License Violations

This is the most developer-relevant issue.

The Key Question

Does Copilot violate GPL and other open source licenses?

Many open source licenses require:

- Attribution

- License inclusion

- Share-alike obligations (GPL)

Plaintiffs argue Copilot outputs code without any of these.

Defendants counter that:

- The model does not “know” licenses

- Outputs are not derivative works

This unresolved question sits at the center of the AI open source legal battle in 2026.

3. DMCA Section 1202 (Copyright Management Information)

This claim survived the first round of motions to dismiss and is central to the plaintiffs’ case.

Section 1202 prohibits:

- Removing or altering copyright management information (CMI)

- Distributing works knowing CMI was removed

Plaintiffs argue Copilot:

- Learned from code with copyright headers

- Outputs similar code without those headers

That, they claim, is automated CMI removal.

This theory has gained traction because it avoids harder questions about direct copying.
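To make the CMI-removal theory concrete, here is a minimal Python sketch that lists which copyright and license lines from an original file are absent from a derived snippet. The regular expressions and the function names `extract_cmi` and `missing_cmi` are illustrative assumptions; they are not a legal definition of CMI and do not describe how any court or tool evaluates it.

```python
import re

# Two common forms of copyright management information (CMI).
# These patterns are illustrative assumptions, not a legal test.
CMI_PATTERNS = [
    re.compile(r"copyright\s+(\(c\)|©)?\s*\d{4}", re.IGNORECASE),
    re.compile(r"SPDX-License-Identifier:\s*\S+"),
]


def extract_cmi(source: str) -> list[str]:
    """Return the stripped lines of `source` that look like CMI."""
    return [
        line.strip()
        for line in source.splitlines()
        if any(pattern.search(line) for pattern in CMI_PATTERNS)
    ]


def missing_cmi(original: str, output: str) -> list[str]:
    """Return CMI lines present in `original` but absent from `output`."""
    kept = set(extract_cmi(output))
    return [line for line in extract_cmi(original) if line not in kept]


original = (
    "# Copyright (c) 2019 Jane Example\n"
    "# SPDX-License-Identifier: GPL-3.0-only\n"
    "def add(a, b):\n"
    "    return a + b\n"
)
suggestion = "def add(a, b):\n    return a + b\n"

for line in missing_cmi(original, suggestion):
    print("missing:", line)
```

In this example the function body is unchanged while both header lines are gone, which is the pattern the plaintiffs describe.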

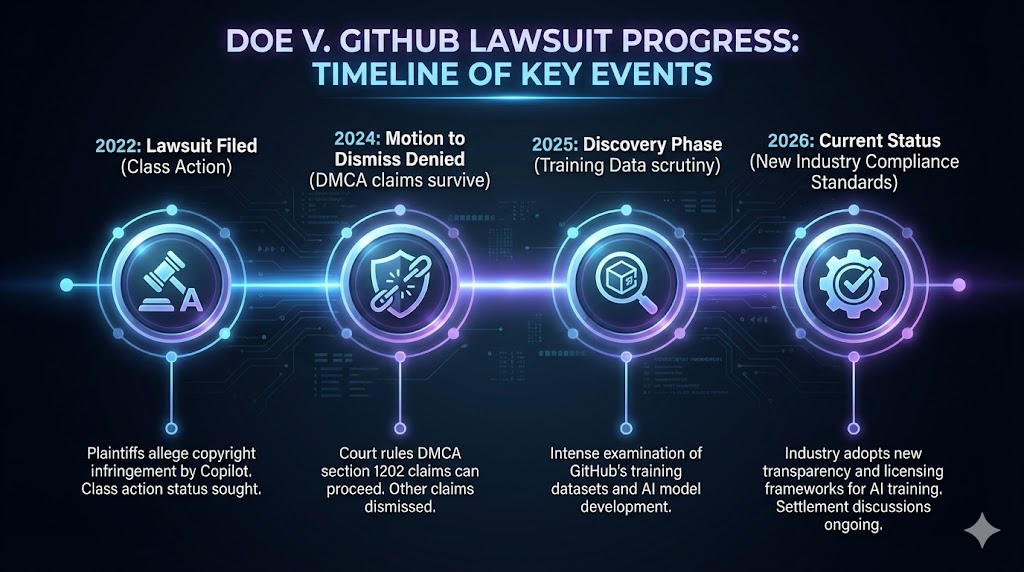

2026 Update: Where the Case Stands

What we know (as of early 2026):

- Several claims survived motions to dismiss

- Courts signaled openness to DMCA 1202 arguments

- Discovery focused on training data and output similarity

What is still uncertain:

- Final rulings on fair use

- Whether AI training itself is infringing

- The standard for “substantial similarity” in AI outputs

Why this matters:

Even without a final verdict, the litigation has already changed industry behavior.

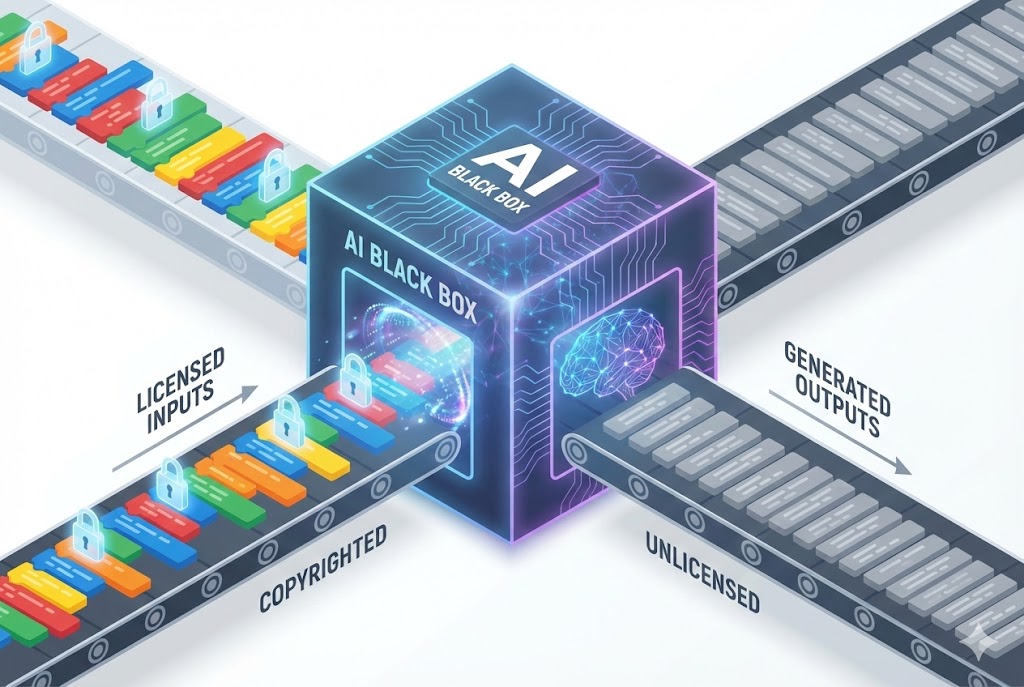

How AI-Generated Code Creates Legal Risk

Simple Flowchart (Conceptual)

Open Source Code (GPL/MIT/Apache)

↓

AI Model Training

↓

Statistical Pattern Learning

↓

AI-Generated Code Output

↓

No License + No Attribution

↓

Potential Copyright + DMCA Risk

This is the compliance gap regulators and courts are now focused on.

Practical Example: Copilot and GPL Code

Scenario:

- A developer uses Copilot

- Copilot outputs a function nearly identical to GPL-licensed code

- The developer ships it in proprietary software

Potential consequences:

- GPL contamination claims

- DMCA Section 1202 exposure

- Contractual breach with enterprise customers

This is no longer theoretical. Software copyright attorneys now see these issues in audits.
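One way a team might screen for the "nearly identical" case in this scenario is a token-level similarity check between an AI suggestion and a known licensed snippet. The sketch below uses Jaccard similarity over 5-token windows. The tokenizer, the window size, and the function names are assumptions chosen for illustration; the score is a screening signal, not the legal standard for substantial similarity.

```python
import re


def shingles(code: str, k: int = 5) -> set[tuple[str, ...]]:
    """Tokenize code and return the set of k-token sliding windows."""
    # Identifiers, numbers, and single punctuation characters become tokens,
    # so whitespace and line breaks do not affect the result.
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", code)
    if len(tokens) < k:
        return {tuple(tokens)} if tokens else set()
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """Return |A ∩ B| / |A ∪ B| over the k-token windows of two snippets."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


gpl_reference = "def add(a, b):\n    return a + b"
suggestion = "def add(a,b): return a+b"

score = jaccard_similarity(gpl_reference, suggestion)
print(f"similarity: {score:.2f}")
```

Here the suggestion differs from the reference only in whitespace, so the two token streams match. Any review threshold a team sets on this score is a policy choice.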

Implications for SaaS Founders

SaaS IP Risk Assessment in 2026

If your product uses AI-generated code, investors and acquirers will ask:

- Do you track AI-assisted code generation?

- Do you scan outputs for license conflicts?

- Can you document training data sources?

Failure to answer these questions now delays funding and exits later.
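The license-conflict question above can be turned into a simple policy check. The following Python sketch maps SPDX license identifiers found in a snippet to a review action for a proprietary product. The category table, the action labels, and the `review_flags` function are hypothetical simplifications; a real policy would come from counsel and would cover many more licenses.

```python
# Simplified, hypothetical policy table keyed by SPDX identifier.
LICENSE_CATEGORY = {
    "MIT": "permissive",
    "Apache-2.0": "permissive",
    "BSD-3-Clause": "permissive",
    "LGPL-3.0-only": "weak-copyleft",
    "MPL-2.0": "weak-copyleft",
    "GPL-2.0-only": "strong-copyleft",
    "GPL-3.0-only": "strong-copyleft",
    "AGPL-3.0-only": "strong-copyleft",
}

# Hypothetical review actions for code shipped in a proprietary product.
PROPRIETARY_ACTIONS = {
    "permissive": "keep-notice",
    "weak-copyleft": "check-obligations",
    "strong-copyleft": "needs-legal-review",
}


def review_flags(detected: list[str]) -> dict[str, str]:
    """Map each detected SPDX identifier to a review action."""
    flags = {}
    for spdx_id in detected:
        category = LICENSE_CATEGORY.get(spdx_id)
        # Anything outside the table is surfaced rather than silently passed.
        flags[spdx_id] = PROPRIETARY_ACTIONS.get(category, "unknown-license")
    return flags


print(review_flags(["MIT", "GPL-3.0-only", "Custom-1.0"]))
```

Keeping the output of a check like this alongside each release gives a record to point to when the diligence questions arrive.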

Copyright lawsuits are just one part of the puzzle. To fully secure your platform, you need a layered approach. Read our guide on Is Your SaaS Code Safe? Copyright vs. Patent vs. Trade Secrets.

Practical Advice for Developers

Developer’s Checklist: How to Protect Your Repo from AI Training in 2026

- Add explicit AI training restrictions in LICENSE files

- Use repository metadata to signal “no AI training”

- Monitor model scraping disclosures

- Document AI-assisted code generation internally

- Run open source compliance scans on AI outputs

No method is perfect, but layered defenses reduce risk.
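For the "document AI-assisted code generation" item, one lightweight option is to record the tool in a commit-message trailer and count those trailers later. The sketch below assumes a team convention of an `Assisted-by:` trailer. That trailer name is a hypothetical convention for illustration, not a Git or GitHub standard, and the functions operate on plain commit-message strings.

```python
import re

# Hypothetical team convention: a trailer line such as
# "Assisted-by: GitHub Copilot" at the end of the commit message.
TRAILER = re.compile(r"^Assisted-by:\s*(.+)$", re.MULTILINE | re.IGNORECASE)


def ai_tools_in_commit(message: str) -> list[str]:
    """Return the tool names recorded in a commit message's trailers."""
    return [name.strip() for name in TRAILER.findall(message)]


def summarize(messages: list[str]) -> dict[str, int]:
    """Count how many trailer entries mention each AI tool."""
    counts: dict[str, int] = {}
    for message in messages:
        for tool in ai_tools_in_commit(message):
            counts[tool] = counts.get(tool, 0) + 1
    return counts


history = [
    "Add parser\n\nAssisted-by: GitHub Copilot",
    "Fix typo in README",
    "Refactor cache\n\nAssisted-by: GitHub Copilot\nReviewed-by: A. Dev",
]
print(summarize(history))
```

The same summary can feed an internal audit trail, since it shows which commits declared AI assistance and which did not.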

Comparison Table: Traditional Coding vs AI-Assisted Coding

| Factor | Traditional Coding | AI-Assisted Coding |
| --- | --- | --- |
| Code Origin | Human-written | Probabilistic output |
| License Awareness | Explicit | Often none |
| Attribution | Manual | Frequently missing |
| Legal Risk | Known | Emerging |
| Auditability | High | Medium to low |

USPTO Patent Eligibility Context

While Doe v. GitHub is a copyright case, it intersects with patent law.

Under current USPTO guidelines:

- An abstract idea does not become patent-eligible merely because AI implements it

- Human contribution remains critical

- An AI system cannot be named as an inventor; only natural persons can

Why this matters:

Companies cannot rely on patents to shield them from copyright exposure tied to AI-generated code.

While copyright protects the code itself, patenting the underlying logic is harder. Make sure your invention doesn’t fall into the ‘abstract idea’ trap by reading Surviving the ‘Alice’ Nightmare.

Expert Quote

“The real risk is not that AI writes code. The risk is that it writes code with no legal memory.”

— U.S. Software Copyright Attorney, Silicon Valley

Future Outlook: What Changes After 2026

Expect:

- AI license-aware models

- Output attribution systems

- Mandatory AI training disclosures

- Contractual AI indemnities in SaaS deals

The direction is clear even if the final ruling is not.

As AI tools evolve from simple assistants to fully autonomous agents, liability shifts even further. Learn who is responsible when AI acts alone in our analysis: Agentic AI & IP Laws 2026: Who Owns the Code?.

Disclaimer

This article is for informational purposes only and does not constitute legal advice. Consult a qualified software copyright attorney for guidance specific to your situation.

FAQs

Does Copilot violate GPL licenses?

Courts have not ruled definitively. The risk depends on output similarity and use context.

Is AI training on open source code illegal?

Not per se. The legal risk increases when outputs reproduce protected expression without attribution.

What is DMCA Section 1202 and why does it matter?

It prohibits removing or altering copyright management information (CMI), such as copyright notices and license terms. Plaintiffs argue AI outputs do exactly that.

Should companies ban AI coding tools?

Most do not ban them outright. Instead, they implement compliance controls.
